7. Confidence Interval

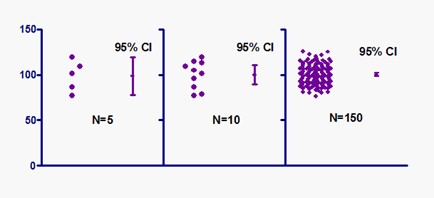

Figure 1: Effect of increased sample size on the precision of a confidence interval (source: https://www.simplypsychology.org/confidence-interval.html)

Confidence intervals (CIs) provide different information from hypothesis tests. A hypothesis test produces a decision about an observed difference: it is either ‘statistically significant’ or ‘statistically non-significant’. In contrast, a confidence interval provides a range around the observed effect size. This range is constructed so that, over repeated sampling, a stated proportion of such intervals (95% for a 95% confidence interval) would capture the true – but unknown – effect size.
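As a rough illustration of how such a range can be computed, and of how it narrows as the sample grows, the following sketch builds a 95% confidence interval for a mean. It assumes a normal approximation (for small samples a t-distribution would usually be preferred), and the function name `mean_confidence_interval` and the measurements are made up for this example.

```python
import math
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Return (lower, upper) for the mean, using a normal approximation."""
    standard_error = stdev(sample) / math.sqrt(len(sample))
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    centre = mean(sample)
    return centre - z * standard_error, centre + z * standard_error


# Made-up measurements, for illustration only.
small_sample = [4.8, 5.1, 5.3, 4.9, 5.4]
# Repeating the same values eight times mimics a larger sample (n = 40)
# with the same mean and a similar spread.
large_sample = small_sample * 8

small_low, small_high = mean_confidence_interval(small_sample)
large_low, large_high = mean_confidence_interval(large_sample)

print(f"n = {len(small_sample):2d}: ({small_low:.2f}, {small_high:.2f})")
print(f"n = {len(large_sample):2d}: ({large_low:.2f}, {large_high:.2f})")
```

Both intervals are centred on the same sample mean, but the interval for the larger sample is narrower because the standard error shrinks with the square root of the sample size.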

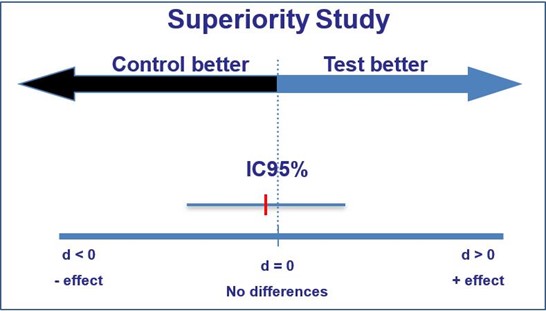

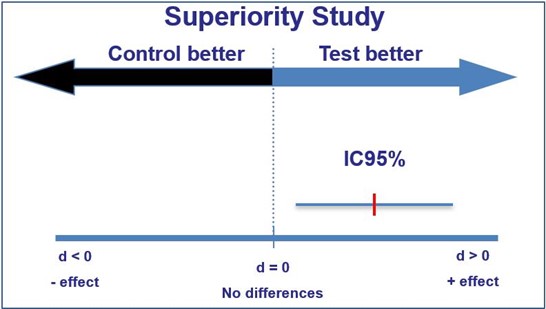

The following graphs exemplify how the confidence interval can give information on whether or not statistical significance for an observation has been reached, similar to a hypothesis test:

If the confidence interval captures the value reflecting ‘no effect’, this represents a difference that is statistically non-significant (for a 95% confidence interval, this is non-significance at the 5% level).

If the confidence interval does not enclose the value reflecting ‘no effect’, this represents a difference that is statistically significant (again, for a 95% confidence interval, this is significance at the 5% level).
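The two cases above can be sketched in code. The example below is only a minimal illustration: it assumes the effect is a difference between two group means, that ‘no effect’ corresponds to a difference of 0, and that a normal approximation with an unpooled standard error is acceptable. The helper names `difference_ci` and `is_significant` and all data values are hypothetical.

```python
import math
from statistics import NormalDist, mean, variance


def difference_ci(group_a, group_b, confidence=0.95):
    """Normal-approximation CI for mean(group_a) - mean(group_b)."""
    difference = mean(group_a) - mean(group_b)
    # Unpooled standard error of the difference between two means.
    standard_error = math.sqrt(
        variance(group_a) / len(group_a) + variance(group_b) / len(group_b)
    )
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return difference - z * standard_error, difference + z * standard_error


def is_significant(interval, no_effect=0.0):
    """True when the interval does not enclose the 'no effect' value."""
    low, high = interval
    return not (low <= no_effect <= high)


# Made-up data, for illustration only.
control = [5.1, 5.3, 4.9, 5.2, 5.0, 5.4]
treated = [5.9, 6.1, 6.4, 6.0, 6.3, 6.2]   # clearly shifted from control
similar = [5.3, 5.0, 5.5, 4.8, 5.3, 5.1]   # close to control

for label, group in [("treated", treated), ("similar", similar)]:
    interval = difference_ci(group, control)
    verdict = "significant" if is_significant(interval) else "non-significant"
    print(f"{label} vs control: ({interval[0]:.2f}, {interval[1]:.2f}) -> {verdict}")
```

For the `treated` group the whole interval lies above 0, so the difference is significant at the 5% level. For the `similar` group the interval encloses 0, so it is not. In both cases the interval bounds also indicate the smallest and largest differences that are compatible with the data.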

In addition to providing an indication of ‘statistical significance’, confidence intervals show the largest and smallest effects that are likely, given the observed data, and thus provide additional useful information.